BigQuery

https://cloud.google.com/bigquery

Install

Install Ibis and dependencies for the BigQuery backend:

Install with the bigquery extra:

pip install 'ibis-framework[bigquery]'And connect:

import ibis

con = ibis.bigquery.connect()- 1

- Adjust connection parameters as needed.

Install for BigQuery:

conda install -c conda-forge ibis-bigqueryAnd connect:

import ibis

con = ibis.bigquery.connect()- 1

- Adjust connection parameters as needed.

Install for BigQuery:

mamba install -c conda-forge ibis-bigqueryAnd connect:

import ibis

con = ibis.bigquery.connect()- 1

- Adjust connection parameters as needed.

Connect

ibis.bigquery.connect

con = ibis.bigquery.connect(

project_id="ibis-bq-project",

dataset_id="testing",

)ibis.bigquery.connect is a thin wrapper around ibis.backends.bigquery.Backend.do_connect.

Connection Parameters

do_connect

do_connect(self, project_id=None, dataset_id='', credentials=None, application_name=None, auth_local_webserver=True, auth_external_data=False, auth_cache='default', partition_column='PARTITIONTIME', client=None, storage_client=None, location=None)

Create a Backend for use with Ibis.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

project_id |

str | None | A BigQuery project id. | None |

dataset_id |

str | A dataset id that lives inside of the project indicated by project_id. |

'' |

credentials |

google.google.auth.google.auth.credentials.google.auth.credentials.Credentials | None | Optional credentials. | None |

application_name |

str | None | A string identifying your application to Google API endpoints. | None |

auth_local_webserver |

bool | Use a local webserver for the user authentication. Binds a webserver to an open port on localhost between 8080 and 8089, inclusive, to receive authentication token. If not set, defaults to False, which requests a token via the console. | True |

auth_external_data |

bool | Authenticate using additional scopes required to query external data sources <https://cloud.google.com/bigquery/external-data-sources>_, such as Google Sheets, files in Google Cloud Storage, or files in Google Drive. If not set, defaults to False, which requests the default BigQuery scopes. |

False |

auth_cache |

str | Selects the behavior of the credentials cache. 'default' Reads credentials from disk if available, otherwise authenticates and caches credentials to disk. 'reauth' Authenticates and caches credentials to disk. 'none' Authenticates and does not cache credentials. Defaults to 'default'. |

'default' |

partition_column |

str | None | Identifier to use instead of default _PARTITIONTIME partition column. Defaults to 'PARTITIONTIME'. |

'PARTITIONTIME' |

client |

google.google.Client | None | A Client from the google.cloud.bigquery package. If not set, one is created using the project_id and credentials. |

None |

storage_client |

google.google.BigQueryReadClient | None | A BigQueryReadClient from the google.cloud.bigquery_storage_v1 package. If not set, one is created using the project_id and credentials. |

None |

location |

str | None | Default location for BigQuery objects. | None |

Returns

| Type | Description |

|---|---|

| ibis.backends.bigquery.Backend | An instance of the BigQuery backend. |

ibis.connect URL format

In addition to ibis.bigquery.connect, you can also connect to BigQuery by passing a properly-formatted BigQuery connection URL to ibis.connect:

con = ibis.connect(f"bigquery://{project_id}/{dataset_id}")This assumes you have already authenticated via the gcloud CLI.

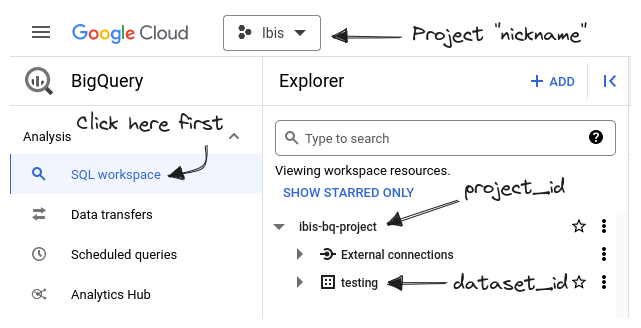

Finding your project_id and dataset_id

Log in to the Google Cloud Console to see which project_ids and dataset_ids are available to use.

BigQuery Authentication

The simplest way to authenticate with the BigQuery backend is to use Google’s gcloud CLI tool.

Once you have gcloud installed, you can authenticate to BigQuery (and other Google Cloud services) by running

gcloud auth loginFor any authentication problems, or information on other ways of authenticating, see the gcloud CLI authorization guide.

bigquery.Backend

add_operation

add_operation(self, operation)

Add a translation function to the backend for a specific operation.

Operations are defined in ibis.expr.operations, and a translation function receives the translator object and an expression as parameters, and returns a value depending on the backend.

compile

compile(self, expr, limit=None, params=None, **_)

Compile an Ibis expression.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

expr |

ibis.ibis.Expr | Ibis expression | required |

limit |

str | None | For expressions yielding result sets; retrieve at most this number of values/rows. Overrides any limit already set on the expression. | None |

params |

collections.abc.Mapping[ibis.ibis.Expr, typing.Any] | None | Named unbound parameters | None |

Returns

| Type | Description |

|---|---|

| typing.Any | The output of compilation. The type of this value depends on the backend. |

connect

connect(self, *args, **kwargs)

Connect to the database.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

*args |

Mandatory connection parameters, see the docstring of do_connect for details. |

() |

|

**kwargs |

Extra connection parameters, see the docstring of do_connect for details. |

{} |

Notes

This creates a new backend instance with saved args and kwargs, then calls reconnect and finally returns the newly created and connected backend instance.

Returns

| Type | Description |

|---|---|

| ibis.backends.base.BaseBackend | An instance of the backend |

create_schema

create_schema(self, name, database=None, force=False, collate=None, **options)

Create a schema named name in database.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

name |

str | Name of the schema to create. | required |

database |

str | None | Name of the database in which to create the schema. If None, the current database is used. |

None |

force |

bool | If False, an exception is raised if the schema exists. |

False |

create_table

create_table(self, name, obj=None, *, schema=None, database=None, temp=False, overwrite=False, default_collate=None, partition_by=None, cluster_by=None, options=None)

Create a table in BigQuery.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

name |

str | Name of the table to create | required |

obj |

pandas.pandas.DataFrame | pyarrow.pyarrow.Table | ibis.ibis.Table | None | The data with which to populate the table; optional, but one of obj or schema must be specified |

None |

schema |

ibis.ibis.Schema | None | The schema of the table to create; optional, but one of obj or schema must be specified |

None |

database |

str | None | The BigQuery dataset in which to create the table; optional | None |

temp |

bool | Whether the table is temporary | False |

overwrite |

bool | If True, replace the table if it already exists, otherwise fail if the table exists |

False |

default_collate |

str | None | Default collation for string columns. See BigQuery’s documentation for more details: https://cloud.google.com/bigquery/docs/reference/standard-sql/collation-concepts | None |

partition_by |

str | None | Partition the table by the given expression. See BigQuery’s documentation for more details: https://cloud.google.com/bigquery/docs/reference/standard-sql/data-definition-language#partition_expression | None |

cluster_by |

collections.abc.Iterable[str] | None | List of columns to cluster the table by. See BigQuery’s documentation for more details: https://cloud.google.com/bigquery/docs/reference/standard-sql/data-definition-language#clustering_column_list | None |

options |

collections.abc.Mapping[str, typing.Any] | None | BigQuery-specific table options; see the BigQuery documentation for details: https://cloud.google.com/bigquery/docs/reference/standard-sql/data-definition-language#table_option_list | None |

Returns

| Type | Description |

|---|---|

| Table | The table that was just created |

create_view

create_view(self, name, obj, *, schema=None, database=None, overwrite=False)

Create a new view from an expression.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

name |

str | Name of the new view. | required |

obj |

ibis.ibis.Table | An Ibis table expression that will be used to create the view. | required |

database |

str | None | Name of the database where the view will be created, if not provided the database’s default is used. | None |

overwrite |

bool | Whether to clobber an existing view with the same name | False |

Returns

| Type | Description |

|---|---|

| Table | The view that was created. |

database

database(self, name=None)

Return a Database object for the name database.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

name |

str | None | Name of the database to return the object for. | None |

Returns

| Type | Description |

|---|---|

| ibis.backends.base.Database | A database object for the specified database. |

drop_schema

drop_schema(self, name, database=None, force=False, cascade=False)

Drop a BigQuery dataset.

drop_table

drop_table(self, name, *, schema=None, database=None, force=False)

Drop a table.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

name |

str | Name of the table to drop. | required |

database |

str | None | Name of the database where the table exists, if not the default. | None |

force |

bool | If False, an exception is raised if the table does not exist. |

False |

drop_view

drop_view(self, name, *, schema=None, database=None, force=False)

Drop a view.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

name |

str | Name of the view to drop. | required |

database |

str | None | Name of the database where the view exists, if not the default. | None |

force |

bool | If False, an exception is raised if the view does not exist. |

False |

execute

execute(self, expr, params=None, limit='default', **kwargs)

Compile and execute the given Ibis expression.

Compile and execute Ibis expression using this backend client interface, returning results in-memory in the appropriate object type

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

expr |

Ibis expression to execute | required | |

limit |

Retrieve at most this number of values/rows. Overrides any limit already set on the expression. | 'default' |

|

params |

Query parameters | None |

|

kwargs |

Extra arguments specific to the backend | {} |

Returns

| Type | Description |

|---|---|

| pandas.pandas.DataFrame | pandas.pandas.Series | scalar | Output from execution |

fetch_from_cursor

fetch_from_cursor(self, cursor, schema)

Fetch data from cursor.

get_schema

get_schema(self, name, schema=None, database=None)

has_operation

has_operation(cls, operation)

list_schemas

list_schemas(self, like=None, database=None)

List existing schemas in the current connection.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

like |

str | None | A pattern in Python’s regex format to filter returned schema names. | None |

database |

str | None | The database to list schemas from. If None, the current database is searched. |

None |

Returns

| Type | Description |

|---|---|

| list[str] | The schema names that exist in the current connection, that match the like pattern if provided. |

list_tables

list_tables(self, like=None, database=None, schema=None)

List the tables in the database.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

like |

str | None | A pattern to use for listing tables. | None |

database |

str | None | The database (project) to perform the list against. | None |

schema |

str | None | The schema (dataset) inside database to perform the list against. ::: {.callout-warning} ## schema refers to database hierarchy The schema parameter does not refer to the column names and types of table. ::: |

None |

raw_sql

raw_sql(self, query, results=False, params=None)

Execute a query string and return the cursor used for execution.

.sql instead

If your query is a SELECT statement you can use the backend .sql method to avoid having to manually release the cursor returned from this method.

You do not need to call .close() on the cursor when running DDL or DML statements like CREATE, INSERT or DROP, only when using SELECT statements.

To release a cursor, call the close method on the returned cursor object.

You can close the cursor by explicitly calling its close method:

cursor = con.raw_sql("SELECT ...")

cursor.close()Or you can use a context manager:

with con.raw_sql("SELECT ...") as cursor:

...Parameters

| Name | Type | Description | Default |

|---|---|---|---|

query |

str | SQL query string | required |

Examples

>>> con = ibis.connect("duckdb://")

>>> with con.raw_sql("SELECT 1") as cursor:

... result = cursor.fetchall()

>>> result

[(1,)]

>>> cursor.closed

Trueread_csv

read_csv(self, path, table_name=None, **kwargs)

Read CSV data into a BigQuery table.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

path |

str | pathlib.Path | Path to a CSV file on GCS or the local filesystem. Globs are supported. | required |

table_name |

str | None | Optional table name | None |

kwargs |

typing.Any | Additional keyword arguments passed to google.cloud.bigquery.LoadJobConfig. |

{} |

Returns

| Type | Description |

|---|---|

| Table | An Ibis table expression |

read_delta

read_delta(self, source, table_name=None, **kwargs)

Register a Delta Lake table in the current database.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

source |

str | pathlib.Path | The data source. Must be a directory containing a Delta Lake table. | required |

table_name |

str | None | An optional name to use for the created table. This defaults to a sequentially generated name. | None |

**kwargs |

typing.Any | Additional keyword arguments passed to the underlying backend or library. | {} |

Returns

| Type | Description |

|---|---|

| ibis.ibis.Table | The just-registered table. |

read_json

read_json(self, path, table_name=None, **kwargs)

Read newline-delimited JSON data into a BigQuery table.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

path |

str | pathlib.Path | Path to a newline-delimited JSON file on GCS or the local filesystem. Globs are supported. | required |

table_name |

str | None | Optional table name | None |

kwargs |

typing.Any | Additional keyword arguments passed to google.cloud.bigquery.LoadJobConfig. |

{} |

Returns

| Type | Description |

|---|---|

| Table | An Ibis table expression |

read_parquet

read_parquet(self, path, table_name=None, **kwargs)

Read Parquet data into a BigQuery table.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

path |

str | pathlib.Path | Path to a Parquet file on GCS or the local filesystem. Globs are supported. | required |

table_name |

str | None | Optional table name | None |

kwargs |

typing.Any | Additional keyword arguments passed to google.cloud.bigquery.LoadJobConfig. |

{} |

Returns

| Type | Description |

|---|---|

| Table | An Ibis table expression |

reconnect

reconnect(self)

Reconnect to the database already configured with connect.

register_options

register_options(cls)

Register custom backend options.

rename_table

rename_table(self, old_name, new_name)

Rename an existing table.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

old_name |

str | The old name of the table. | required |

new_name |

str | The new name of the table. | required |

set_database

set_database(self, name)

sql

sql(self, query, schema=None, dialect=None)

Convert a SQL query to an Ibis table expression.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

query |

str | SQL string | required |

schema |

ibis.ibis.Schema | None | The expected schema for this query. If not provided, will be inferred automatically if possible. | None |

dialect |

str | None | Optional string indicating the dialect of query. The default value of None will use the backend’s native dialect. |

None |

Returns

| Type | Description |

|---|---|

| Table | Table expression |

table

table(self, name, database=None, schema=None)

Construct a table expression.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

name |

str | Table name | required |

database |

str | None | Database name | None |

Returns

| Type | Description |

|---|---|

| Table | Table expression |

to_csv

to_csv(self, expr, path, *, params=None, **kwargs)

Write the results of executing the given expression to a CSV file.

This method is eager and will execute the associated expression immediately.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

expr |

ibis.ibis.Table | The ibis expression to execute and persist to CSV. | required |

path |

str | pathlib.Path | The data source. A string or Path to the CSV file. | required |

params |

collections.abc.Mapping[ibis.ibis.Scalar, typing.Any] | None | Mapping of scalar parameter expressions to value. | None |

kwargs |

typing.Any | Additional keyword arguments passed to pyarrow.csv.CSVWriter | {} |

https |

required |

to_delta

to_delta(self, expr, path, *, params=None, **kwargs)

Write the results of executing the given expression to a Delta Lake table.

This method is eager and will execute the associated expression immediately.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

expr |

ibis.ibis.Table | The ibis expression to execute and persist to Delta Lake table. | required |

path |

str | pathlib.Path | The data source. A string or Path to the Delta Lake table. | required |

params |

collections.abc.Mapping[ibis.ibis.Scalar, typing.Any] | None | Mapping of scalar parameter expressions to value. | None |

kwargs |

typing.Any | Additional keyword arguments passed to deltalake.writer.write_deltalake method | {} |

to_pandas

to_pandas(self, expr, *, params=None, limit=None, **kwargs)

Execute an Ibis expression and return a pandas DataFrame, Series, or scalar.

This method is a wrapper around execute.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

expr |

ibis.ibis.Expr | Ibis expression to execute. | required |

params |

collections.abc.Mapping[ibis.ibis.Scalar, typing.Any] | None | Mapping of scalar parameter expressions to value. | None |

limit |

int | str | None | An integer to effect a specific row limit. A value of None means “no limit”. The default is in ibis/config.py. |

None |

kwargs |

typing.Any | Keyword arguments | {} |

to_pandas_batches

to_pandas_batches(self, expr, *, params=None, limit=None, chunk_size=1000000, **kwargs)

Execute an Ibis expression and return an iterator of pandas DataFrames.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

expr |

ibis.ibis.Expr | Ibis expression to execute. | required |

params |

collections.abc.Mapping[ibis.ibis.Scalar, typing.Any] | None | Mapping of scalar parameter expressions to value. | None |

limit |

int | str | None | An integer to effect a specific row limit. A value of None means “no limit”. The default is in ibis/config.py. |

None |

chunk_size |

int | Maximum number of rows in each returned DataFrame batch. This may have no effect depending on the backend. |

1000000 |

kwargs |

typing.Any | Keyword arguments | {} |

Returns

| Type | Description |

|---|---|

| collections.abc.Iterator[pandas.pandas.DataFrame] | An iterator of pandas DataFrames. |

to_parquet

to_parquet(self, expr, path, *, params=None, **kwargs)

Write the results of executing the given expression to a parquet file.

This method is eager and will execute the associated expression immediately.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

expr |

ibis.ibis.Table | The ibis expression to execute and persist to parquet. | required |

path |

str | pathlib.Path | The data source. A string or Path to the parquet file. | required |

params |

collections.abc.Mapping[ibis.ibis.Scalar, typing.Any] | None | Mapping of scalar parameter expressions to value. | None |

**kwargs |

typing.Any | Additional keyword arguments passed to pyarrow.parquet.ParquetWriter | {} |

https |

required |

to_pyarrow

to_pyarrow(self, expr, *, params=None, limit=None, **kwargs)

Execute expression and return results in as a pyarrow table.

This method is eager and will execute the associated expression immediately.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

expr |

ibis.ibis.Expr | Ibis expression to export to pyarrow | required |

params |

collections.abc.Mapping[ibis.ibis.Scalar, typing.Any] | None | Mapping of scalar parameter expressions to value. | None |

limit |

int | str | None | An integer to effect a specific row limit. A value of None means “no limit”. The default is in ibis/config.py. |

None |

kwargs |

typing.Any | Keyword arguments | {} |

Returns

| Type | Description |

|---|---|

| Table | A pyarrow table holding the results of the executed expression. |

to_pyarrow_batches

to_pyarrow_batches(self, expr, *, params=None, limit=None, chunk_size=1000000, **kwargs)

Execute expression and return an iterator of pyarrow record batches.

This method is eager and will execute the associated expression immediately.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

expr |

ibis.ibis.Expr | Ibis expression to export to pyarrow | required |

limit |

int | str | None | An integer to effect a specific row limit. A value of None means “no limit”. The default is in ibis/config.py. |

None |

params |

collections.abc.Mapping[ibis.ibis.Scalar, typing.Any] | None | Mapping of scalar parameter expressions to value. | None |

chunk_size |

int | Maximum number of rows in each returned record batch. | 1000000 |

Returns

| Type | Description |

|---|---|

| RecordBatchReader | Collection of pyarrow RecordBatchs. |

to_torch

to_torch(self, expr, *, params=None, limit=None, **kwargs)

Execute an expression and return results as a dictionary of torch tensors.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

expr |

ibis.ibis.Expr | Ibis expression to execute. | required |

params |

collections.abc.Mapping[ibis.ibis.Scalar, typing.Any] | None | Parameters to substitute into the expression. | None |

limit |

int | str | None | An integer to effect a specific row limit. A value of None means no limit. |

None |

kwargs |

typing.Any | Keyword arguments passed into the backend’s to_torch implementation. |

{} |

Returns

| Type | Description |

|---|---|

| dict[str, torch.torch.Tensor] | A dictionary of torch tensors, keyed by column name. |